The threat of fingerprinting means a browser script cannot typically enumerate the locally installed fonts. There is also currently no native way of determining if a font supports a particular codepoint. One would think that determining font support for codepoints would therefore be problematic, but it turns out to be relatively easy for our use-case with Chrome.

Consider the following JavaScript snippet:

var canvas = document.createElement("canvas");

var context = canvas.getContext("2d");

context.font = "10px font-a,font-b,font-c";

var measure = context.measureText(text);

If a character in the "text" string is not supported by "font-a", Chrome text rendering falls back to using "font-b". If "font-b" also doesn't support the character, "font-c" is used. If the character is not supported by "font-c" either, a system default is used.

We can take advantage of this fall back mechanism by using a "blank" font that guarantees to render any glyph as zero-width/zero-mark. Fortunately, there's just such a font already out there: Adobe Blank:

@font-face {

font-family: "blank";

src: url("https://raw.githubusercontent.com/adobe-fonts/

adobe-blank/master/AdobeBlank.otf.woff") format("woff");

}

Now, we can write a function to test a font for supported characters:

function IsBlank(font, text) {var canvas = document.createElement("canvas");var context = canvas.getContext("2d");context.font = `10px "${font}",blank`;var measure = context.measureText(text);return (measure.width <= 0) &&(measure.actualBoundingBoxRight <= -measure.actualBoundingBoxLeft);}

The actual code in universe.js has some optimisations and additional features; see "MeasureTextContext()" and "TextIsBlank()".

Using this technique, we can iterate around some "well-known" fonts and render them where appropriate. For our example of codepoint U+0040:

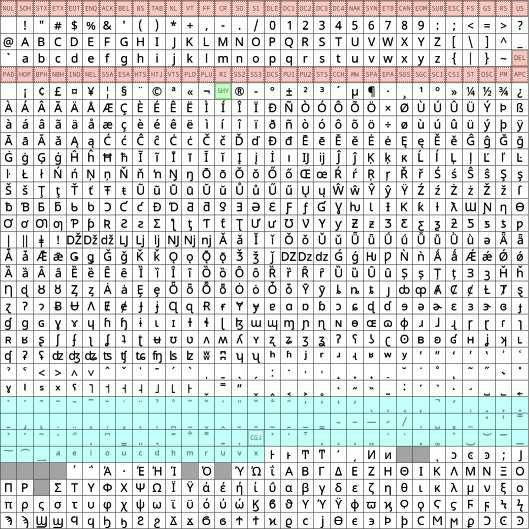

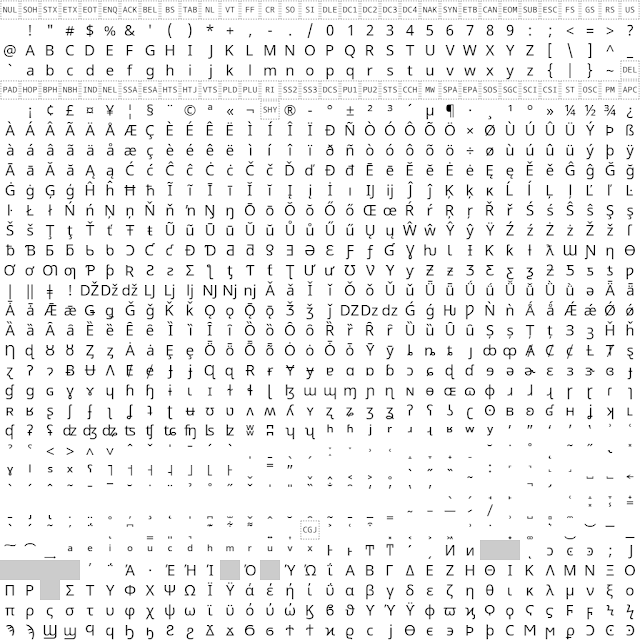

The origins of each of the glyphs above are:

- "notoverse" is the 32-by-32 pixel bitmap glyph described previously.

- "(default)" is the default font used for this codepoint by Chrome. In my case, it's "Times New Roman".

- "(sans-serif)" is the default sans serif font: "Arial".

- "(serif)" is the default serif font: "Times New Roman".

- "(monospace)" is the default monospace font: "Consolas".

- "r12a.io" is the PNG from Richard Ishida's UniView online app.

- "glyphwiki.org" is the SVG from GlyphWiki. It looks a bit squashed because it's a half-width glyph; GlyphWiki is primarily concerned with CJK glyphs.

- "unifont" is the GNU Unifont font. I couldn't find a webfont-friendly source for version 14, so I had to piggyback version 12 from Terence Eden.

- "noto" is a composite font of several dozen Google Noto fonts. See ".noto" in universe.css.

- Subsequent "Noto ..." glyphs are from the corresponding, individual Noto font, in priority order.